A New Beginning

Testers constantly encounter new trends in the experimental branches of Linux distributions. I look at who drives Linux forward and how the future is shaping up.

Lightwise, 123RF

The Linux operating system kernel will celebrate its 25th birthday this coming summer. During its lifetime, a fantastic variety of digital ecosystems has developed around both the kernel and the GNU software suite. In the meantime, developers continue to work carefully and in well-defined increments on the kernel itself. There are no huge leaps forward. Instead, steady improvement in manageable steps is preferred.

The term "Linux" has taken on various meanings in popular usage. In this article, however, the term refers to the Linux kernel and the surrounding distributions. Here, I will look at the question of who has set the technological and ideological standards for development in the past, and who will continue to do so in the future.

The development occurring over the past few years reflects the adage that it's not always necessary to reinvent the wheel. Developers are using and extending older technologies in new ways. An example of this is systemd, a tool that has become established across the distributions and uses kernel functions like namespaces and cgroups as its essential components (Figure 1).

Wayland [1] is a new display protocol that also exemplifies modernization by evolution. Docker and CoreOS likewise rely on time-proven ideas like BSD jails [2] and Solaris zones [3]. The Btrfs filesystem picks up the torch from Sun's ZFS [4], making use of RAID and integrating snapshots [5], as well as the Solaris zones model.

Even /usr merge [6], a scheme already executed in some distributions (Figure 2) and on the cusp of being executed in others, takes its cues from the direction Solaris took some 15 years ago. The underlying problem and the contemporary directory tree that the Filesystem Hierarchy Standard (FHS) [7] is built on date back to the mundane storage problems that Unix creators Ken Thompson and Dennis Ritchie encountered back in 1970 [8].

Therefore, it's a good idea to investigate the roots of ideas that appear to be innovative. Sometimes, all that is actually involved is a long-delayed correction to a past shortcoming.

Many genuine innovations have their start inside a corporation. At the very least, corporate settings have been responsible for adding improvements. Thanks to this pattern of innovation, the large distributions from the 1990s continue to survive. SUSE originated in 1996 with roots in Softlanding Linux System (SLS) [9] (Figure 3) and Slackware [10]. Debian had already been founded by 1993. In that same year, Red Hat was launched by developers in the United States. Later, Red Hat conceived an industrial-strength distribution, Red Hat Enterprise Linux (RHEL), and drove it forward in parallel with development of the community distribution Fedora.

Ubuntu appeared for the first time in 2004, along with the promise that it would revolutionize the Linux desktop. Since then, people have been waiting for the "Year of the Desktop" much as they wait for the proverbial Godot, and they have been waiting in vain. Linux has developed in line with its usage. In spite of the many innovations that have been made, it is still not a unified product like Microsoft Windows.

Aside from Debian, the distributions referred to belong to companies that earn money with Linux. Debian has kept going for 23 years without that kind of commercial backing. Even today, a large community of Debian developers who have no financial interest in the project maintain it as a do-ocracy (in which you have a say if you participate, and the more you participate, the greater your say).

The developers work together according to a set of rules and guidelines. The most important among these are the Debian Manifesto [11] and the Social Contract [12]. The latter is a type of contract, which, among other provisions, incorporates the Debian Free Software Guidelines (DFSG) [13]. The developers adopted these guidelines in 1997 and revised them in 2004.

The rules and guidelines for the project have taken on importance beyond the scope of their original use. Many derivative projects use them as a standard for working on the development of open source software and also for getting along in a developer community. In this way, Debian has contributed a great deal in terms of ideology for working on open source software.

The approach on the technology side of the project is, by contrast, leisurely. Stability is the primary focus, not the rush to innovate for innovation's sake found elsewhere. Even so, Debian has recently shown itself more inclined to adopt innovation from the outside. The change to systemd (Figure 4), however painful, is an example. Currently, developers are laying the groundwork for /usr merge. As usual, a lot of discussion is taking place, yet things are moving forward.

Red Hat no doubt stands at the forefront of innovative development for Linux. This has been especially true for the past five years, during which Red Hat developers have written significant amounts of software that has advanced the evolution of the Linux universe. In the case of systemd, the developer Lennart Poettering has been the driving force, but there is no general consensus about the project. This is true even though the new init system has largely been successful in asserting itself with the distributions.

A small but vocal minority, however, views the project as a betrayal of Unix and considers Red Hat an evil force that tries to control Linux. With all due respect for the criticism, the vast majority of users believe that systemd advances Linux because it gets rid of dead wood and unifies many aspects among the distributions.

The people at Red Hat have been talking about stateless Linux [14] since 2004 (Figure 5). The discussion has grown along with continued development, but so far implementation of the idea remains far off in the future. On the one hand, the desired result is that developers, distributions, and users should come closer together when new versions of packages are involved. On the other hand, more unified standards should make it easier for developers to test improvements.

For example, the technology makes it possible for a corporation to tie the operating system images on a fleet of notebooks to a single stateless image on a server. The images on the notebooks are updated when they boot. Device mapper [15] accomplishes the updates atomically. This makes it possible to roll back the system in case of errors. Meanwhile, the live directory tree does not undergo changes.
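
The idea of an atomic switch with rollback can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it does not use device mapper but swaps a symlink instead, and the `images/` directory, the `current` link, and all function names are hypothetical.

```python
import os
import tempfile


def switch_current(root, image_name):
    """Atomically point root/current at root/images/image_name."""
    link = os.path.join(root, "current")
    tmp_link = link + ".new"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)
    # Build the new symlink under a temporary name first ...
    os.symlink(os.path.join("images", image_name), tmp_link)
    # ... then rename it over the old one; rename is atomic on POSIX filesystems.
    os.replace(tmp_link, link)


def active_image(root):
    """Return the name of the image that root/current points at."""
    return os.path.basename(os.readlink(os.path.join(root, "current")))


def demo():
    """Deploy one image, update to a second, then roll back (hypothetical names)."""
    root = tempfile.mkdtemp()
    for name in ("os-1", "os-2"):
        os.makedirs(os.path.join(root, "images", name))
    switch_current(root, "os-1")    # initial deployment
    before = active_image(root)
    switch_current(root, "os-2")    # update
    after_update = active_image(root)
    switch_current(root, before)    # rollback to the previous image
    after_rollback = active_image(root)
    return before, after_update, after_rollback
```

Because the old image stays on disk untouched, a rollback is just another switch back to it; a reader never sees a half-updated tree.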

Stateless Linux should make it possible for distributions to store all relevant files in /usr. These systems should boot even when the /etc and /var directories are empty. In this case, a standard configuration is available for systemd. Such systems do not permanently save states. They always start in the same predefined state, and systemd creates files in /etc and /var before those programs that are dependent on the files in /etc start.
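
A minimal Python sketch can show what populating an empty /etc from defaults kept under /usr might look like. The `usr/share/factory/etc` layout inside a scratch directory and the function names are assumptions made for illustration; this is not how systemd itself is implemented.

```python
import os
import shutil
import tempfile


def populate_from_factory(root):
    """Copy factory defaults into etc/ for every file that is still missing."""
    factory = os.path.join(root, "usr", "share", "factory", "etc")
    etc = os.path.join(root, "etc")
    created = []
    for dirpath, _dirs, files in os.walk(factory):
        rel = os.path.relpath(dirpath, factory)
        target_dir = os.path.normpath(os.path.join(etc, rel))
        os.makedirs(target_dir, exist_ok=True)
        for name in sorted(files):
            target = os.path.join(target_dir, name)
            if not os.path.exists(target):    # never overwrite local state
                shutil.copy2(os.path.join(dirpath, name), target)
                created.append(os.path.relpath(target, etc))
    return sorted(created)


def demo():
    """Simulate a first boot with an empty etc/ in a scratch directory."""
    root = tempfile.mkdtemp()
    factory = os.path.join(root, "usr", "share", "factory", "etc")
    os.makedirs(factory)
    for name in ("hostname", "nsswitch.conf"):
        with open(os.path.join(factory, name), "w") as fh:
            fh.write("default\n")
    os.makedirs(os.path.join(root, "etc"))    # empty /etc, as on first boot
    first = populate_from_factory(root)
    second = populate_from_factory(root)      # nothing left to create
    return first, second
```

Running the step a second time creates nothing, which is the property that lets such a system start from the same predefined state every time.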

Poettering sees the advantage to stateless systems in that software providers no longer have to adapt their programs to the libraries of distributions. Instead, providers can deliver a suitable run-time environment. This makes it possible to install packages regardless of the distribution.

Updates occur atomically, and rollbacks are possible [16]. Security is increased due to a chain of trust that extends from the firmware via the bootloader all the way up to the kernel. This approach brings distributions from all areas including desktop, server, and cloud closer together. Nonetheless, this has not been cause for celebration in the Linux world. As with systemd, opponents are concerned that some of the standardization envisioned will block other developments.

Kdbus [17] is another building block used by the ranks of systemd developers. However, developers responsible for the kernel have refused to accept Kdbus for the time being. The issue is that a mechanism for interprocess communication (IPC), like D-Bus [18], would need to be anchored directly in the kernel. According to the developers supporting the use of Kdbus, their approach would increase speed greatly and permit the exchange of data between processes in volumes reaching into the gigabits. They maintain that this type of capability would be important for further development of systemd.

These developers are presumably no longer committed to the D-Bus idea for this mechanism after the criticism leveled by Linus Torvalds and others. Instead, they are tending more towards an open architecture.

Poettering gives a concrete example of combining several recent innovations. He describes the possibility of storing and executing multiple operating systems or several instances of a system together with multiple run times and frameworks in a single Btrfs volume.

To illustrate this idea, he sketches out a system in which Fedora, Mandriva, and Arch Linux utilize this model and make corresponding images available. Poettering's sketches assume that developers also adapt the desktop environments and applications. He demonstrates how his ideas can become reality by using sub-volumes in various architectures in a single Btrfs volume. The question of whether different versions of applications like Firefox actually start with Mandriva or Arch Linux becomes irrelevant, because the system assigns an appropriate run time for each of them upon launch.
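
How a system might pick the matching run time for an application can be sketched in Python. The colon-separated subvolume names used here (`runtime:<vendor>:<arch>:<version>` and `app:<name>:<vendor>:<arch>:<version>`) are a simplified, hypothetical scheme chosen for this example, as is the function name.

```python
def runtime_for(app, subvolumes):
    """Return the runtime subvolume an app subvolume asks for, or None."""
    # Hypothetical scheme: app:<name>:<runtime-vendor>:<arch>:<version>
    kind, _name, vendor, arch, _version = app.split(":")
    if kind != "app":
        raise ValueError("not an app subvolume: " + app)
    prefix = "runtime:%s:%s:" % (vendor, arch)
    candidates = [s for s in subvolumes if s.startswith(prefix)]
    # Pick the lexicographically highest name; real version ordering is subtler.
    return max(candidates) if candidates else None


SUBVOLUMES = [
    "runtime:fedora:x86_64:22",
    "runtime:fedora:x86_64:23",
    "runtime:arch:x86_64:2016.03",
    "app:firefox:fedora:x86_64:44",
    "app:firefox:arch:x86_64:45",
]
```

With this list, the Fedora build of Firefox and the Arch build each resolve to their own run time on the same volume, while an application built against a vendor with no run time installed resolves to `None`.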

It will probably take many years for this kind of visionary thinking to become part of the mainstream of the distributions. Even so, an identical concept already exists in a niche distribution called Bedrock. See the article on Bedrock elsewhere in this issue for more information.

The software company Red Hat has legions of developers, some of whom are working on technologies that hold promise for the future. The current release of Fedora (23) makes it possible to perform firmware updates through Gnome and, as a further innovation, introduces XDG apps [19].

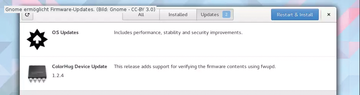

Firmware updates from within the running system should make tools like a USB stick with FreeDOS obsolete when it comes to updating the BIOS on Linux. Using the new technology, it is no longer even necessary to switch into BIOS/UEFI. Instead, the BIOS and other firmware are updated directly through the Gnome app [20] (Figure 6).

Figure 6: Firmware updates that come directly through the Gnome software center would make for greater user convenience.

However, the project is dependent on cooperative efforts from hardware manufacturers. The companies will have to store the updated firmware in a database so that the system can automatically find the firmware and notify the user. A glimmer of hope in this regard is that Dell, one of the world's largest computer manufacturers, has begun to enter its updates into this type of database [21].
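
The lookup described here boils down to comparing installed firmware versions against what vendors have published. The following Python sketch shows the principle only; the device IDs, the dictionary format, and the function names are hypothetical and do not reflect the actual database or its API.

```python
def parse_version(text):
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def find_updates(installed, database):
    """Return {device_id: newest_version} for devices with newer firmware."""
    updates = {}
    for device_id, current in installed.items():
        available = database.get(device_id, [])
        newer = [v for v in available if parse_version(v) > parse_version(current)]
        if newer:
            updates[device_id] = max(newer, key=parse_version)
    return updates


# Hypothetical sample data: what is installed and what vendors have published.
INSTALLED = {"laptop-bios": "1.2.0", "dock": "0.9"}
DATABASE = {"laptop-bios": ["1.1.0", "1.2.0", "1.10.0"], "webcam": ["2.0"]}
```

A device the database does not know, like the dock in the sample data, simply produces no notification, which is why the scheme depends on manufacturers uploading their firmware.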

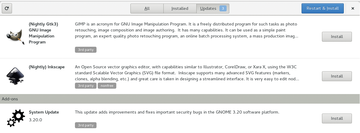

The XDG apps concept is still in the early stages and is included in Fedora 23 as a technology preview (Figure 7). This new app format has been borrowed from cloud computing because it increases security and operability for desktop applications. It also simplifies development processes.

Figure 7: Theoretically, it would be possible to move the Gnome module software as an XDG app between two systems.

This is done by executing applications in their own sandbox which, in principle, isolates them from the remainder of the system similarly to what is done with containers. Thus, developers would be able to distribute applications in packages independent of a particular distribution.

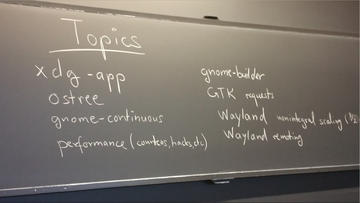

The container format LXC and the OSTree project [22] from Colin Walters serve as the basis for this approach (Figure 8). The latter appears to have borrowed features from Bedrock and the Nix package manager [23] to provide for simultaneous administration of packages of live distributions in a single filesystem.

Figure 8: The OSTree project was one of the important items on the agenda at a meeting of Gnome developers.

Each program in a system running with XDG apps relies on a clearly defined runtime environment even though different environments can exist in a single filesystem. This results in an intersection between the stateless systems referred to above and the /usr filesystem without mutable content.

Developers of Fedora 24, which is scheduled for release in June 2016, are planning a "Gnome IDE Builder," which is said to be able to package XDG apps. Therefore, the "Gnome Software" program should also be capable of dealing with the new format.

The developers at Red Hat are not alone in taking initiative for setting the direction of the future development for Linux. Canonical is also heavily involved in defining the evolution of Linux. However, most Ubuntu users would agree that innovation has been pretty much missing for the last couple of years. Ubuntu on the desktop has been singularly boring.

This is probably because Canonical has been leaving no stone unturned to deliver genuine convergence in the form of a single foundation of source code for multiple platforms. This approach is different from that taken, for example, by Microsoft Continuum [24]. Canonical's approach is to set a smartphone or a tablet in a docking station and attach a monitor, keyboard, and mouse. Then, voilà, you have an actual desktop. Thus, it will no longer be necessary to use smartphone or tablet apps. Instead, you would use the applications on the Ubuntu desktop (Figure 9).

Figure 9: If the Canonical developers are successful in implementing their ideas, then laptops and desktop PCs will become a dying breed.

With this vision in mind, Canonical conceived the Snappy package format, which it derived from the click packages of Ubuntu Touch. It is worth noting here that Canonical is probably very optimistic with respect to overcoming outstanding issues. As part of their efforts, the developers created their own display server, Mir. In combination with Unity 8, this display server forms the basis of the new platform.

Considering the scope of the modifications, confidence in the future success of the endeavor has been waning. The recently released Ubuntu tablet BQ Aquaris M10 is the first device for the actual use of products based on convergence.

The Snappy package format borrowed heavily from the concepts underlying CoreOS [25] and Red Hat's Atomic Host [26] (Figure 10) and is also used in the Ubuntu Snappy Core. The latter is a lightweight operating system based on the Ubuntu kernel and is intended for gateways, routers, and equipment for the Internet of Things (IoT). It offers atomic upgrades with rollback functionality, making it possible for routers with Snappy Core to continuously receive updated firmware.

At the end of February 2016, Canonical announced it had formed a partnership with the Taiwanese manufacturer MediaTek, which wants to use Ubuntu Core on the MT7623 router for the SmartHome [27]. This system on chip (SoC) device offers numerous possibilities for wireless networking and is therefore suitable for use as a control center for the growing number of IoT devices.

Although Canonical is a relatively small company, OpenStack gives it a foot in the door to the cloud computing world. This is how the new format LXD [28] originated, built on the basis of Linux Containers (LXC). The format is a hybrid composed of a container engine and hypervisor, bringing the best of both worlds together without the overhead of the typical hypervisor.

Mark Shuttleworth has given the name Hypertainer to this new format. There are a number of realistic scenarios that point to its use on the desktop. Intel is heading in the same direction with the Clear Linux operating system, which is designated for use in the cloud. You can read more about Clear Linux elsewhere in this issue.

The innovations discussed here show that the handling of Linux and the creation of distributions are currently in flux, which should drastically change how the operating system is used in the coming decade. Linux companies could well take the lead because they try to meet the needs of their clients. Even so, it is usually the developers and enthusiasts of Fedora – and other distributions that incorporate the latest trends into their updated versions – who perform development, testing, and experimentation.

The changes can already be seen in the package systems. Their archives have become more compatible with one another and also more secure given their isolation from each individual system. The traditional directory tree is gradually being simplified, and the kernel remains an essential foundation while undergoing careful improvement.

Infos